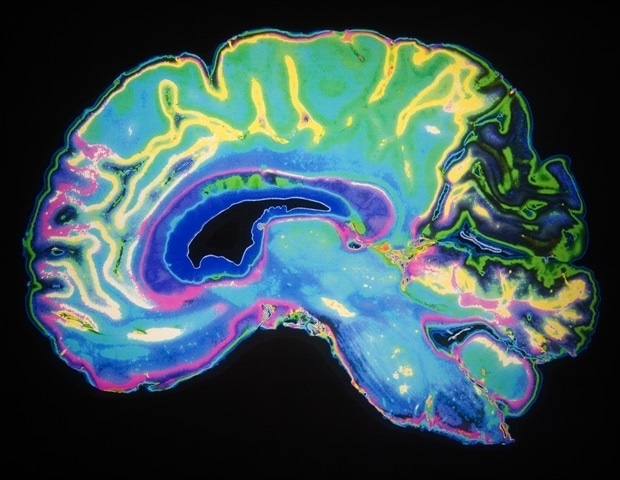

In a recent study published in Nature Communications, researchers used high-resolution micro-electrocorticographic (µECoG) neural recordings to improve speech decoding for speech prostheses.

Study: High-resolution neural recordings improve the accuracy of speech decoding. Image Credit: fizkes/Shutterstock.com


Background

Neurodegenerative disorders such as amyotrophic lateral sclerosis (ALS) can impair communication ability and quality of life. By decoding brain signals, neural speech prostheses could restore communication.

Current approaches, however, rely on coarse recordings that fail to capture the complex spatiotemporal structure of human brain activity. Although computer-aided technologies have shown promise in improving quality of life, they are sometimes limited by slow processing and inefficiency.

High-resolution brain recordings may allow for precise decoding of spoken information, paving the way for effective prosthesis development.

About the study

In the present study, researchers investigated whether high-density micro-electrocorticographic (µECoG) arrays could improve the accuracy of speech decoding for individuals with motor disorders such as ALS and locked-in syndrome.

During speech production tasks, the researchers recorded neural activity from the speech motor cortex using liquid crystal polymer thin-film (LCP-TF) ECoG arrays. They decoded speech by predicting spoken phonemes from high-gamma (HG) band activity.

To benchmark the findings, speech decoding from the high-density ECoG data was compared with decoding from standard intracranial electroencephalography (IEEG). Four participants (one female; mean age 53 years) were studied to characterize speech-related brain activity at the micro-scale.

Two variants of the LCP-TF ECoG arrays were used. They had up to 57x higher electrode density than macro-ECoG arrays and up to 9x higher density than high-density ECoG arrays.

Participants S1, S2, and S3 were undergoing deep brain stimulation (DBS) surgery for movement disorders, and their 128-channel arrays were tunneled through the DBS burr holes. The researchers then examined spatiotemporal activation patterns specific to units of speech production, namely the articulatory properties of particular phonemes.

Normalized HG activity was averaged across trials, grouped by the first-position phoneme of each non-word utterance. In a cortical state space, ECoG HG activity showed similar population-level patterns organized by articulator.
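The normalization and trial-averaging step can be sketched with NumPy. This is a minimal illustration, not the study's pipeline, and it rests on three assumptions:

- HG power is stored as a trials x electrodes x time array.
- Normalization is a z-score against a baseline window.
- The helper names `normalize_to_baseline` and `average_by_phoneme` are hypothetical.

```python
import numpy as np


def normalize_to_baseline(hg, baseline):
    """Z-score HG power per electrode against a baseline window.

    hg: array of shape (trials, electrodes, time).
    baseline: slice over the time axis used as the reference period (assumed).
    """
    base = hg[:, :, baseline]
    mu = base.mean(axis=(0, 2), keepdims=True)         # per-electrode baseline mean
    sd = base.std(axis=(0, 2), keepdims=True) + 1e-12  # guard against zero variance
    return (hg - mu) / sd


def average_by_phoneme(hg_norm, first_phonemes):
    """Average normalized HG over trials that share the same first-position phoneme."""
    labels = np.asarray(first_phonemes)
    return {str(p): hg_norm[labels == p].mean(axis=0) for p in np.unique(labels)}


# Example with random data: 6 trials, 4 electrodes, 100 time samples.
rng = np.random.default_rng(0)
hg = rng.normal(size=(6, 4, 100))
avg = average_by_phoneme(normalize_to_baseline(hg, slice(0, 20)), ["a", "b", "a", "g", "b", "a"])
print(sorted(avg), avg["a"].shape)  # ['a', 'b', 'g'] (4, 100)
```

Each entry of the returned dictionary is an electrodes x time map for one phoneme.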

The researchers then tested whether this organization was spatially dependent. They decoded manually aligned phonemes with a supervised linear discriminant analysis (LDA) model applied to a low-dimensional subspace, and evaluated the model with 20-fold nested cross-validation.
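A subspace-plus-LDA decoder with nested cross-validation can be sketched in plain NumPy. This sketch makes several assumptions:

- PCA is used as the low-dimensional subspace.
- The inner loop selects only the number of components.
- The folds are plain, not stratified.
- All function names are hypothetical.

The study's exact subspace method and hyperparameters may differ.

```python
import numpy as np


def fit_pca(X, k):
    """Return the training mean and the top-k principal axes (rows)."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]


def fit_lda(Z, y, shrink=1e-3):
    """Gaussian LDA with a shared covariance; `shrink` adds a small ridge."""
    classes = np.unique(y)
    means = np.array([Z[y == c].mean(axis=0) for c in classes])
    resid = Z - means[np.searchsorted(classes, y)]
    cov = resid.T @ resid / max(len(Z) - len(classes), 1) + shrink * np.eye(Z.shape[1])
    log_priors = np.log([np.mean(y == c) for c in classes])
    return classes, means, np.linalg.inv(cov), log_priors


def predict_lda(model, Z):
    classes, means, icov, log_priors = model
    # Linear discriminant score: z' S^-1 mu_k - 0.5 mu_k' S^-1 mu_k + log prior_k
    scores = Z @ icov @ means.T - 0.5 * np.sum((means @ icov) * means, axis=1) + log_priors
    return classes[np.argmax(scores, axis=1)]


def pca_lda_accuracy(X_tr, y_tr, X_te, y_te, k):
    mean, axes = fit_pca(X_tr, k)  # PCA is fit on training data only
    model = fit_lda((X_tr - mean) @ axes.T, y_tr)
    return np.mean(predict_lda(model, (X_te - mean) @ axes.T) == y_te)


def nested_cv(X, y, comp_grid, outer=20, inner=5, seed=0):
    """Outer folds estimate accuracy; inner folds pick the subspace size."""
    rng = np.random.default_rng(seed)
    idx = np.arange(len(y))
    scores = []
    for test in np.array_split(rng.permutation(idx), outer):
        train = np.setdiff1d(idx, test)
        Xtr, ytr = X[train], y[train]
        inner_folds = np.array_split(rng.permutation(len(ytr)), inner)

        def inner_score(k):
            accs = []
            for val in inner_folds:
                fit = np.setdiff1d(np.arange(len(ytr)), val)
                accs.append(pca_lda_accuracy(Xtr[fit], ytr[fit], Xtr[val], ytr[val], k))
            return np.mean(accs)

        best_k = max(comp_grid, key=inner_score)
        scores.append(pca_lda_accuracy(Xtr, ytr, X[test], y[test], best_k))
    return float(np.mean(scores))


# Toy data: 3 hypothetical phoneme classes, 20 trials each, 10 features per trial.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(3), 20)
X = rng.normal(size=(60, 10))
X[np.arange(60), y] += 4.0  # shift each class along its own feature
print(nested_cv(X, y, comp_grid=[2, 3, 5]))
```

The nesting matters because the outer test fold never influences the choice of subspace size. Without it, the reported accuracy would be optimistically biased.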

The univariate phoneme decoding performance of each ECoG electrode was evaluated alongside its high-gamma evoked signal-to-noise ratio (HG-ESNR, in dB).
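One common way to express an evoked SNR in decibels is 10·log10 of the mean HG power in a response window divided by the mean power in a baseline window. The sketch below uses that definition. The window choices and the helper names `esnr_db` and `fit_line` are hypothetical, and the study's exact ESNR definition may differ. Relating each electrode's ESNR to its decoding accuracy with a least-squares line is one way to produce "linear model fits" like those reported in the Results, assuming those fits relate the same two quantities.

```python
import numpy as np


def esnr_db(hg_power, response, baseline):
    """Evoked SNR in dB per electrode: response-window vs baseline-window HG power.

    hg_power: (trials, electrodes, time) non-negative HG power (assumed input).
    response, baseline: slices over the time axis (hypothetical window choices).
    """
    resp = hg_power[:, :, response].mean(axis=(0, 2))
    base = hg_power[:, :, baseline].mean(axis=(0, 2))
    return 10.0 * np.log10(resp / base)


def fit_line(x, y):
    """Least-squares line y = slope * x + intercept, plus the Pearson correlation r."""
    slope, intercept = np.polyfit(x, y, 1)
    r = np.corrcoef(x, y)[0, 1]
    return slope, intercept, r


# Two toy electrodes whose power rises tenfold and hundredfold after sample 50.
power = np.ones((4, 2, 100))
power[:, 0, 50:] = 10.0
power[:, 1, 50:] = 100.0
print(esnr_db(power, slice(50, 100), slice(0, 50)))  # roughly 10 dB and 20 dB
```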

Because S1 and S2 had sufficient SNR and data for these analyses, their recordings were used to test how the spatial resolution and spatial coverage of the neural signals affect decoding.
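Spatial resolution and spatial coverage can be varied independently on a gridded recording. This sketch makes the following assumptions:

- Signals are laid out as a rows x cols x time array.
- Resolution is reduced by keeping every n-th electrode.
- Coverage is reduced by cropping a contiguous patch.
- The 8 x 16 layout and the function names are hypothetical.

The study's exact subsampling scheme may differ.

```python
import numpy as np


def subsample_grid(grid, step):
    """Coarser resolution, same coverage: keep every `step`-th electrode per axis.

    grid: array (rows, cols, ...) of per-electrode signals in array layout.
    The effective pitch becomes step * original pitch.
    """
    return grid[::step, ::step]


def crop_grid(grid, rows, cols, top=0, left=0):
    """Same resolution, smaller coverage: keep a contiguous rows x cols patch."""
    return grid[top:top + rows, left:left + cols]


# Hypothetical 8 x 16 layout (128 channels) with 500 time samples per channel.
signals = np.zeros((8, 16, 500))
print(subsample_grid(signals, 2).shape)  # (4, 8, 500)
print(crop_grid(signals, 4, 8).shape)    # (4, 8, 500)
```

Both reductions leave 32 channels, so comparing decoding accuracy between them separates the effect of electrode pitch from the effect of the cortical area covered.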

To decode the phoneme at each position within an utterance without prior knowledge of the statistical relationships between phonemes (phonotactics), the researchers used a phoneme-sequence generation approach. For each utterance, HG activity from all significant ECoG electrodes was fed into an encoder-decoder recurrent neural network (RNN).
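The encoder-decoder idea can be illustrated with an untrained forward pass in NumPy. This is a sketch of the general architecture, not the study's model:

- The encoder summarizes an utterance's HG time series into a context vector.
- The decoder greedily emits one phoneme index per position.

The following are assumptions for brevity: the plain tanh cells, random weights, layer sizes, the start token, feeding back the previous prediction, and the class name `TinySeq2Seq`.

```python
import numpy as np


class TinySeq2Seq:
    """Forward pass of a minimal encoder-decoder RNN with random (untrained) weights."""

    def __init__(self, n_electrodes, n_hidden, n_phonemes, seed=0):
        rng = np.random.default_rng(seed)
        s = 0.1
        self.W_in = rng.normal(0, s, (n_hidden, n_electrodes))  # encoder input weights
        self.W_enc = rng.normal(0, s, (n_hidden, n_hidden))     # encoder recurrence
        self.E = rng.normal(0, s, (n_phonemes + 1, n_hidden))   # phoneme embeddings + start token
        self.W_dec = rng.normal(0, s, (n_hidden, n_hidden))     # decoder recurrence
        self.W_out = rng.normal(0, s, (n_phonemes, n_hidden))   # hidden state -> phoneme logits
        self.start = n_phonemes                                 # index of the start token

    def encode(self, hg):
        """hg: (time, electrodes) HG features for one utterance -> context vector."""
        h = np.zeros(self.W_enc.shape[0])
        for x_t in hg:
            h = np.tanh(self.W_in @ x_t + self.W_enc @ h)
        return h

    def decode(self, context, n_positions):
        """Greedily emit one phoneme index per position, feeding each prediction back in."""
        h, token, out = context, self.start, []
        for _ in range(n_positions):
            h = np.tanh(self.E[token] + self.W_dec @ h)
            token = int(np.argmax(self.W_out @ h))
            out.append(token)
        return out


# Arbitrary sizes: 128 electrodes, 200 time steps, 9 phoneme classes, 3 positions.
model = TinySeq2Seq(n_electrodes=128, n_hidden=32, n_phonemes=9)
hg = np.random.default_rng(1).normal(size=(200, 128))
print(model.decode(model.encode(hg), n_positions=3))  # three indices in range(9)
```

In practice the weights would be learned from labeled utterances. The sketch only shows how a variable-length neural time series is mapped to a fixed number of positional phoneme predictions.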

Results

Compared with macro-ECoG and stereo-electroencephalography (SEEG), the high-density arrays yielded brain signals with 57 times higher spatial resolution and 48% higher signal-to-noise ratio. This improved decoding by 35% relative to standard intracranial signals. Accurate decoding depended on the high spatial resolution of the neural interface.

Nonlinear decoding models that exploited the richer spatiotemporal neural information outperformed linear approaches.

During speech articulation, spectro-temporal brain activity changed significantly, including marked increases in HG band power. Compared with standard IEEG recordings, signals from the high-density ECoG recordings showed a 48% increase in signal-to-noise ratio. This was indicated by the correlation coefficient of the high-gamma envelope between micro-electrode pairs, measured from -500 ms to +500 ms relative to utterance onset.
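The HG envelope and the pairwise envelope correlation can be sketched in NumPy. The envelope is computed by zeroing all spectral components outside the band, doubling the positive-frequency ones, and taking the magnitude of the inverse FFT; this yields the analytic amplitude of the band-passed signal. The sketch makes these assumptions:

- The high-gamma band is 70 to 150 Hz, a common choice.
- Filtering uses a single FFT mask.
- The function names are hypothetical.

The study's filtering may differ.

```python
import numpy as np


def hg_envelope(x, fs, band=(70.0, 150.0)):
    """Analytic amplitude of the band-passed signal via an FFT mask.

    x: (channels, samples) raw signal; fs: sampling rate in Hz (must exceed 2 * band[1]).
    """
    freqs = np.fft.fftfreq(x.shape[-1], d=1.0 / fs)
    spec = np.fft.fft(x, axis=-1)
    # Keep only positive in-band frequencies and double them: the inverse FFT
    # is then the analytic signal of the band-passed data.
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return np.abs(np.fft.ifft(spec * (2.0 * mask), axis=-1))


def epoch_window(env, fs, onset_s, start_s=-0.5, stop_s=0.5):
    """Slice envelopes from start_s to stop_s seconds around an utterance onset."""
    i0 = max(int(round((onset_s + start_s) * fs)), 0)
    i1 = int(round((onset_s + stop_s) * fs))
    return env[:, i0:i1]


def pairwise_envelope_corr(env):
    """Pearson correlation matrix between channel envelopes (rows are channels)."""
    return np.corrcoef(env)


# Three hypothetical noise channels, 2 s at 1 kHz, utterance onset at 1.0 s.
fs = 1000
x = np.random.default_rng(0).normal(size=(3, 2 * fs))
env = hg_envelope(x, fs)
print(pairwise_envelope_corr(epoch_window(env, fs, onset_s=1.0)).shape)  # (3, 3)
```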

HG activity showed fine-scale spatial tuning across the arrays, suggesting that speech-informative electrodes may be spatially clustered.

Speech information in HG activity was spatially discriminative at scales below 2 mm. Relative to utterance onset, all four participants showed distinct spatiotemporal patterns for four different articulators.
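One common way to probe spatial scale is to ask how signal similarity changes with inter-electrode distance. The sketch below does this by binning pairwise correlations by distance on a regular grid. The grid layout, the pitch, and the function names are hypothetical, and this is not necessarily the analysis the study used.

```python
import numpy as np


def electrode_positions(rows, cols, pitch_mm):
    """(x, y) center coordinates in mm for a regular rows x cols grid (hypothetical layout)."""
    r, c = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    return np.column_stack([c.ravel(), r.ravel()]) * pitch_mm


def corr_by_distance(corr, pos, bin_mm):
    """Mean pairwise correlation per distance bin of width bin_mm (each pair counted once)."""
    d = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    iu = np.triu_indices(len(pos), k=1)
    dist, vals = d[iu], corr[iu]
    bins = np.floor(dist / bin_mm).astype(int)
    return {float(b * bin_mm): float(vals[bins == b].mean()) for b in np.unique(bins)}


# Toy 2 x 2 grid where correlation falls linearly with distance.
pos = electrode_positions(2, 2, pitch_mm=1.0)
dist = np.linalg.norm(pos[:, None] - pos[None], axis=-1)
print(corr_by_distance(1 - 0.1 * dist, pos, bin_mm=0.25))
```

If correlation drops appreciably between neighboring bins below 2 mm, adjacent electrodes at that spacing carry partly independent information, which is the premise behind dense sampling.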

The ECoG arrays outperformed standard clinical recordings by 36%, indicating their ability to capture higher-SNR micro-scale activity and decode speech.

Linear model fits revealed modest but significant correlations for S1, S2, and S3, and a strong correlation for S4. High-density ECoG decoding showed systematic errors, which mainly reflected the articulatory structure of speech.
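Errors that reflect articulatory structure can be quantified in several ways. One way is to measure what share of misclassifications land on a phoneme that shares the true phoneme's articulator, and to compare that share with what uniform confusions would give. The phoneme-to-articulator mapping below is a hypothetical illustration, and the function names are also hypothetical. The study's phoneme set and articulator grouping may differ.

```python
# Hypothetical phoneme-to-articulator mapping, for illustration only.
ARTICULATOR = {
    "b": "labial", "p": "labial", "v": "labial",
    "g": "dorsal", "k": "dorsal",
    "a": "low vowel", "ae": "low vowel",
    "i": "high vowel", "u": "high vowel",
}


def articulator_error_share(true, pred, mapping=ARTICULATOR):
    """Fraction of misclassifications whose predicted phoneme shares the true articulator."""
    errors = [(t, p) for t, p in zip(true, pred) if t != p]
    if not errors:
        return 0.0
    return sum(mapping[t] == mapping[p] for t, p in errors) / len(errors)


def chance_share(true, pred, mapping=ARTICULATOR):
    """Expected share if each error picked uniformly among the other phonemes."""
    phonemes = list(mapping)
    errors = [t for t, p in zip(true, pred) if t != p]
    if not errors:
        return 0.0

    def same_fraction(t):
        peers = sum(mapping[q] == mapping[t] for q in phonemes) - 1
        return peers / (len(phonemes) - 1)

    return sum(same_fraction(t) for t in errors) / len(errors)


true = ["b", "p", "g", "a", "i"]
pred = ["p", "b", "k", "i", "i"]
print(articulator_error_share(true, pred), chance_share(true, pred))  # 0.75 0.1875
```

An observed share well above the chance share indicates that confusions cluster within articulators rather than being spread at random.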

Decoding performance improved markedly with increasing spatial resolution. Accuracy declined as the simulated contact size increased, with the smallest contact size yielding the highest accuracy.
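A simple way to approximate larger contacts from a high-density recording is to average neighboring electrodes in non-overlapping blocks. The sketch below assumes a rows x cols x time array and uses a hypothetical function name. Whether this matches the study's contact-size simulation is an assumption.

```python
import numpy as np


def simulate_contact_size(grid, k):
    """Average non-overlapping k x k blocks of electrodes to mimic larger contacts.

    grid: (rows, cols, time) signals; rows and cols are trimmed to multiples of k.
    """
    rows, cols = (grid.shape[0] // k) * k, (grid.shape[1] // k) * k
    g = grid[:rows, :cols]
    # Split each spatial axis into (blocks, k) and average within each k x k block.
    return g.reshape(rows // k, k, cols // k, k, *g.shape[2:]).mean(axis=(1, 3))


grid = np.arange(16, dtype=float).reshape(4, 4, 1)
print(simulate_contact_size(grid, 2)[:, :, 0])  # 2 x 2 block means of the 4 x 4 grid
```

Decoding with the averaged signals at increasing `k` and comparing accuracy would trace how performance changes as effective contact size grows.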

The electrode spacing needed to match full-array decoding performance decreased as the number of uniquely decoded phonemes increased. This indicates that the value of high-resolution brain recordings grows with the number of phonemes to be distinguished. As decoding models scale up, dense spatial sampling becomes even more essential.

Conclusion

Overall, the findings showed that high-density LCP-TF ECoG arrays enable high-quality speech decoding for neural speech prostheses.

Micro-scale recordings decoded speech more accurately than standard IEEG electrodes and provided a better understanding of the neural processes underlying speech production.

The findings support using high-density ECoG in brain-computer interfaces to restore speech for individuals with motor impairments who have lost the ability to communicate verbally. Future work might involve developing automatic co-registration algorithms and building ECoG grids with fiducial markers.